Polling has looked surprisingly good for Democrats. Are they being set up for disappointment again?

It just seems to keep on happening — Democrats get their hopes up from rosy-looking polls, but they get a rude awakening when votes are tallied on election night.

In 2016, Trump’s win shocked the world. In 2020, a seeming Democratic romp turned into a nail-biter. And now, as the 2022 midterms are drawing nearer, polls show Democrats performing surprisingly decently — pointing toward a close election rather than the long-expected GOP wave.

Unless, of course, the polls are just underestimating Republicans again.

And lately, there’s been a debate among election analysts, including the New York Times’s Nate Cohn and FiveThirtyEight’s Nate Silver, about whether that’s exactly what we should expect this time.

It has always been a good idea to treat polls, poll averages, and election forecasts with some healthy skepticism. They’re all good at getting us in the neighborhood of the outcome, most of the time. But in any given cycle polls are frequently off by a few points on average, and they can miss by much more in individual races while being on target in others.

So sure, polls can be wrong. The debate here is over a different question: Have polls so persistently underestimated Republican candidates of late that it’s simple common sense to suspect it’s happening again?

Or is the recent polling error tougher to generalize about, meaning that we should be more hesitant to suspect a bias against the GOP, and that Democrats maybe shouldn’t feel so anxious?

My own view is that it makes all the sense in the world to be deeply skeptical of polls showing big Democratic leads in states like Wisconsin and Ohio, where polls have consistently greatly overestimated Democrats across several election cycles. But the picture is less clear in other states, where polling error hasn’t been so clear or consistent. I wouldn’t blindly “trust” those polls, but I wouldn’t assume they’re likely wrong, either.

What was wrong with the polls?

The last cycle in which Democrats really felt the polls didn’t set them up for disappointment was 2012. Polls that year did fluctuate somewhat, but they usually showed President Obama as the favorite to win reelection, and forecast models based on those polls did the same.

There was, however, a dissenter — Dean Chambers, founder of the website “Unskewed Polls.” Chambers, a conservative, argued that most pollsters were systematically undercounting Republican voters. So he re-weighted their results to reflect the more-Romney-leaning electorate he expected — “unskewing them.”

Much mockery from liberals about this rather crude methodology ensued, and when the results of the election came in, Chambers got egg on his face: Obama and Democrats actually did somewhat better than the polls had shown.

Here’s the funny part: In every election cycle since then, Chambers would have had a point.

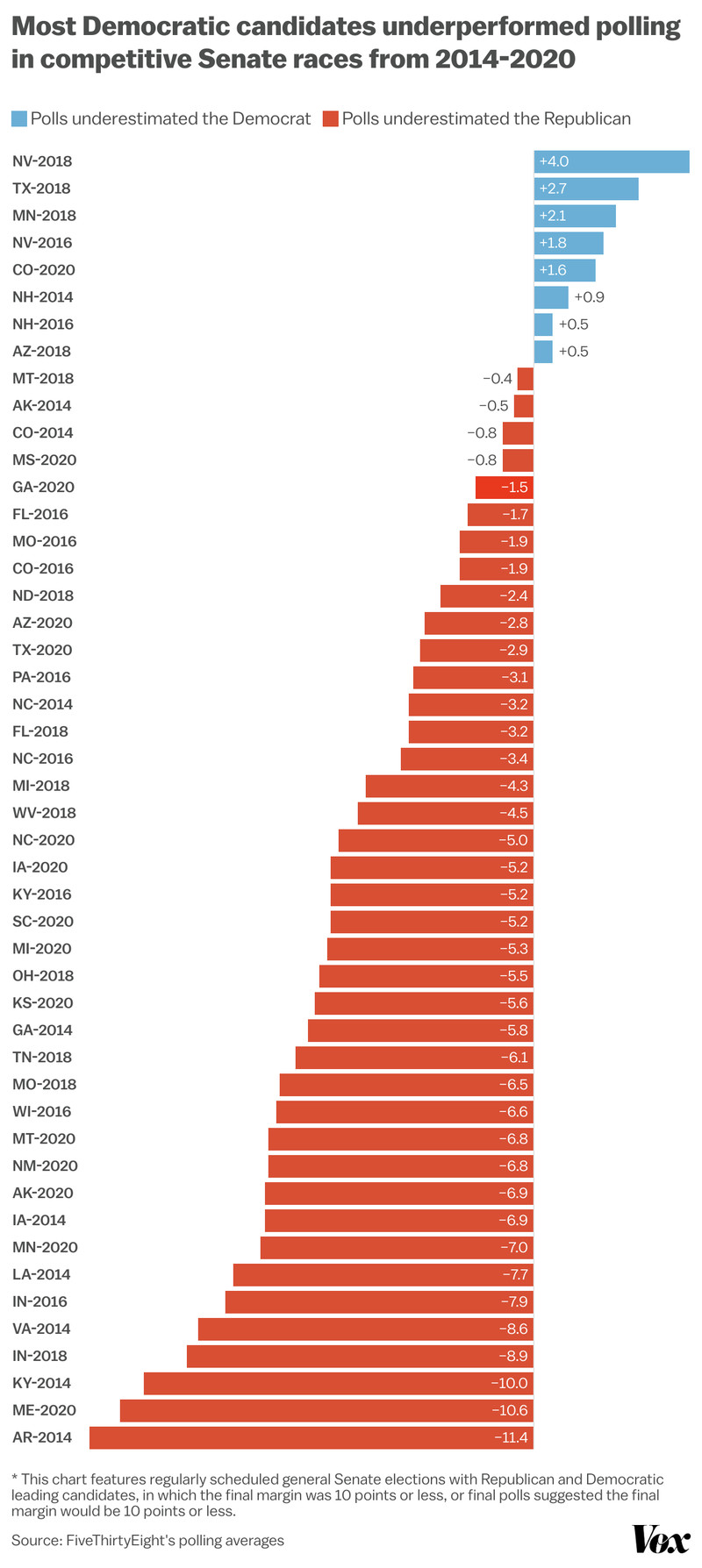

Andrew Prokop / Vox

First came the 2014 midterms, a GOP wave year. The final Senate polls correctly indicated a Republican takeover, but they understated the size of GOP victories in almost every competitive race, by nearly 6 points on average. National House polling showed a similar discrepancy.

In 2016, it happened again. National presidential and House polls were fairly close to the results, but in most presidential swing states, polls underestimated Trump. Polls also underestimated GOP Senate candidates in competitive contests by about 3 points on average.

In the 2018 midterms, then, there was another discrepancy between national House polling (which was fairly close to accurate) and competitive Senate state polling (where Republicans were underestimated by 2.5 points on average).

And in 2020, polls had their worst performance in decades, because they significantly overestimated Democrats’ margins at nearly every level — presidential popular vote, presidential swing states, Senate swing states, and the House — by an average of nearly 5 points.

So, over the last four cycles, national polls have twice been reasonably accurate and twice underestimated Republicans. But relevant for our purposes this year, polls of competitive Senate races underestimated Republicans in all four election cycles. (And, of course, presidential swing state polls underestimated Trump twice, though that’s more relevant for 2024.)

Why were the polls off?

A polling error of about 3 points on average is actually pretty normal. All polling is an inexact science attempting to model the opinion of a large population based on a sample of a small part of that population. Things could go awry in sampling (if certain voters are more difficult for the pollster to reach), or in weighting (as pollsters try to ensure their sample is representative of the electorate, they may make incorrect assumptions about rates at which demographics are likely to turn out). Additionally, undecided voters making up their minds at the very last minute could break disproportionately to one candidate or side. These things happen!

But if polls are consistently erring, over multiple cycles, in the same partisan direction, and often in the same states or regions, that may indicate a fundamental problem.

Part of the recent debate among election analysts is about whether that has actually happened, that is, about how we should interpret those last few cycles of poll results. Has there been a consistent overestimation of Democrats, meaning a problem of pollsters reaching Trump-supporting Republicans? Or has it been a more mixed set of results from which people are over-reading patterns?

If you look at Senate polling of competitive contests from 2014 to 2020, and swing state presidential polling in 2016 and 2020, the pattern of bias seems quite plain: Polling underestimated Republicans far more often than Democrats in these contests, which stretch across several cycles at this point. Often, these errors were most pronounced in certain states or regions, such as Rust Belt states or very red states. So Cohn sees “warning signs” that recent polls may be overestimating Democrats in those same states, an “artifact of persistent and unaddressed biases in survey research.”

Silver takes a broader view, incorporating national polling, polling of governor’s races, and polling of off-year and special elections into his analysis, and concludes that the picture looks more mixed. He argues that polls have either been pretty close or even underestimated Democrats in various elections in 2017, 2021, and 2022 (particularly after the Dobbs decision). He views 2018 in particular as a mixed bag, not demonstrating a “systematic Democratic bias.” And he posits that perhaps “Republicans benefit from higher turnout only when Trump himself is on the ballot,” meaning that 2016 and 2020 might be the wrong elections to focus on when thinking about this year.

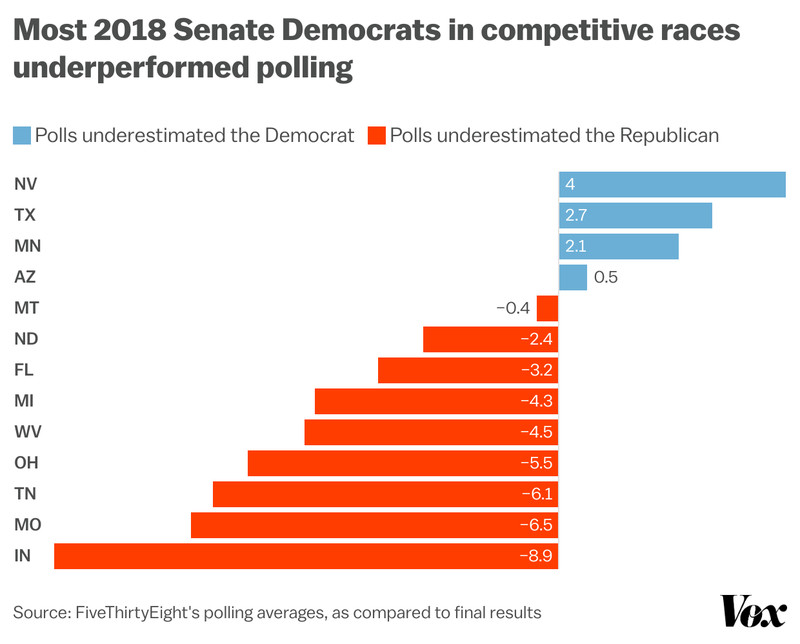

A closer look at 2018

I have a different interpretation of polls’ performance in 2018 than Silver does, though. According to his numbers, polling averages underestimated Democrats by about 1 point on average in the House and in governor’s races, and there was no partisan bias in Senate polls on average that year.

But there’s a catch: The Senate map that year had an unusually large number of contests in solidly blue states, none of which proved to be competitive. Democrats outperformed polls in nearly all of those contests.

Yet if we look at 2018’s actually competitive races — which that year were in purple and red states — most Democratic candidates underperformed their polls, and often by quite a lot.

In Florida, West Virginia, Michigan, Ohio, Tennessee, Missouri, and Indiana, the final margin was more than 3 points worse for the Democrat than FiveThirtyEight’s final polling average had indicated. Nevada was the only competitive state in which the Democrat outperformed polls by more than 3 points.

So, for the purposes of someone trying to figure out which way the Senate would tip, the polls did functionally underestimate Republicans in 2018 too.

Here’s another caveat, though: 2022’s competitive Senate map does not look like 2018’s. That year, Democrats were defending 10 seats in states Trump won two years prior, many of them deep red states (such as North Dakota, Indiana, and Missouri, where some of the biggest polling errors were). The competitive map in 2014, another year in which the polls significantly underestimated the GOP, was similarly red. But in 2022, Democrats’ top seats to defend or pick up are in pure purple states that Biden won narrowly: Georgia, Nevada, Arizona, Pennsylvania, and Wisconsin.

The trick about trying to draw lessons from history is that nothing will ever be identical. Each situation is new and will have similarities and differences to things that happened in the past. A comparison necessitates choosing certain past events to examine, while omitting others. And the more past events you look at, the more conflicting evidence you’ll find.
